In this article, we’ll cover my end-of-year project: implementing a DevSecOps pipeline for a data engineering application.

Tools used:

- GitLab CI/CD

- Amazon Web Services

- Terraform

- SonarQube

- Horusec

- Trivy

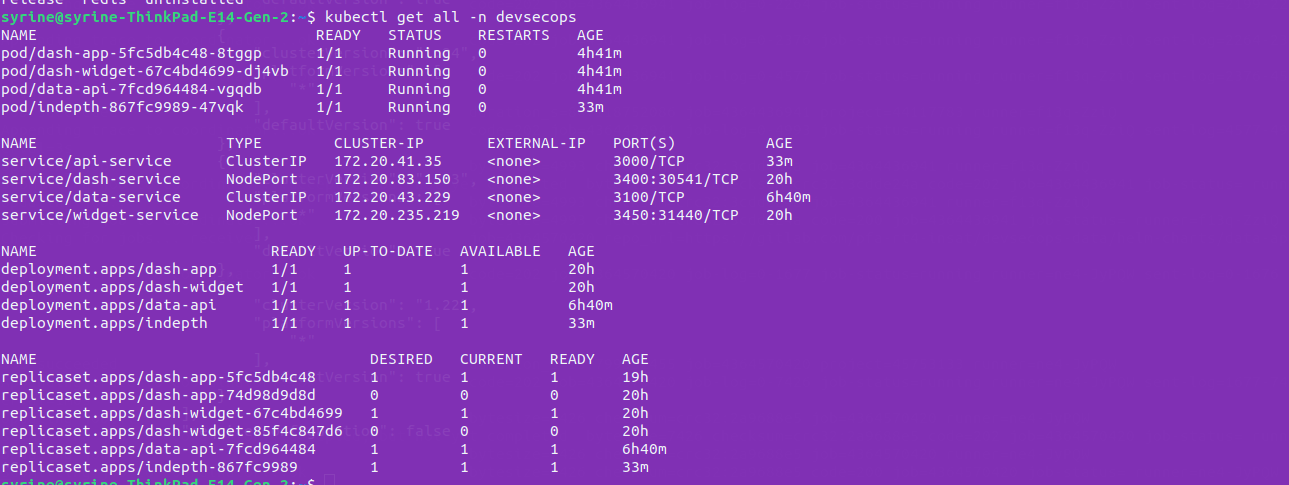

Application Architecture

Our project consists of 4 micro-services:

- A service that serves as the front end and handles the login feature.

- A service that serves the widgets that power the client dashboard.

- A NestJS REST API that handles login and manages the required configuration.

- An API for the widgets that calculates various statistics.

The project also uses two databases:

- ClickHouse, an analytics database that provides the statistics and data served by our widget API.

- A PostgreSQL database that stores user information and configurations. It serves the Nest API.

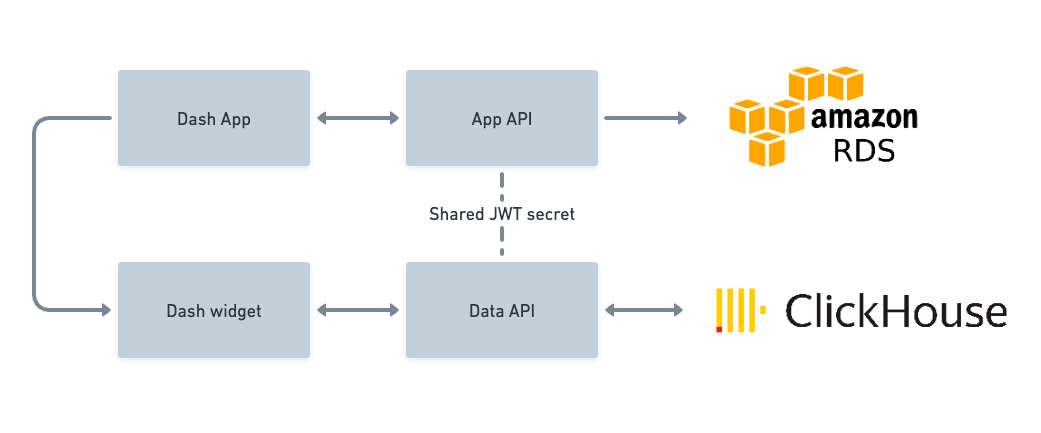

Architecture and specification of the infrastructure

Our AWS infrastructure is designed with high availability and redundancy in mind. Everything runs inside a single Virtual Private Cloud (VPC), an isolated and secure environment within AWS where resources are launched. The VPC spans two Availability Zones (AZs), so the system keeps running even if one AZ encounters issues.

Each AZ in this setup includes two types of subnets: public and private. The public subnet houses a NAT Gateway, which lets instances in the private subnet reach the internet for outbound traffic without being directly reachable from it.

Within the private subnets, we run Amazon Elastic Kubernetes Service (EKS), Amazon Relational Database Service (RDS), and an Amazon Elastic Compute Cloud (EC2) instance for ClickHouse.

This design combines managed AWS services with the public/private subnet split for security, and spreads resources across multiple AZs for high availability.
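To make the subnet layout concrete, here is a minimal Python sketch that carves a VPC range into one public and one private subnet per AZ. The CIDR range, the `/20` subnet size, and the zone names are assumptions for illustration; the real values of our VPC are not listed in this article.

```python
import ipaddress


def plan_subnets(vpc_cidr: str, zones: list[str], new_prefix: int = 20) -> list[dict]:
    """Assign one public and one private subnet to each Availability Zone."""
    vpc = ipaddress.ip_network(vpc_cidr)
    # Carve the VPC range into equally sized, non-overlapping blocks.
    blocks = vpc.subnets(new_prefix=new_prefix)
    plan = []
    for zone in zones:
        for kind in ("public", "private"):
            plan.append({"zone": zone, "kind": kind, "cidr": str(next(blocks))})
    return plan


if __name__ == "__main__":
    # Hypothetical values: the article does not state the region or CIDR range.
    for subnet in plan_subnets("10.0.0.0/16", ["eu-west-1a", "eu-west-1b"]):
        print(subnet)
```

Each AZ ends up with its own pair of subnets, which is what allows the workloads in the private subnets to keep running if one AZ goes down.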

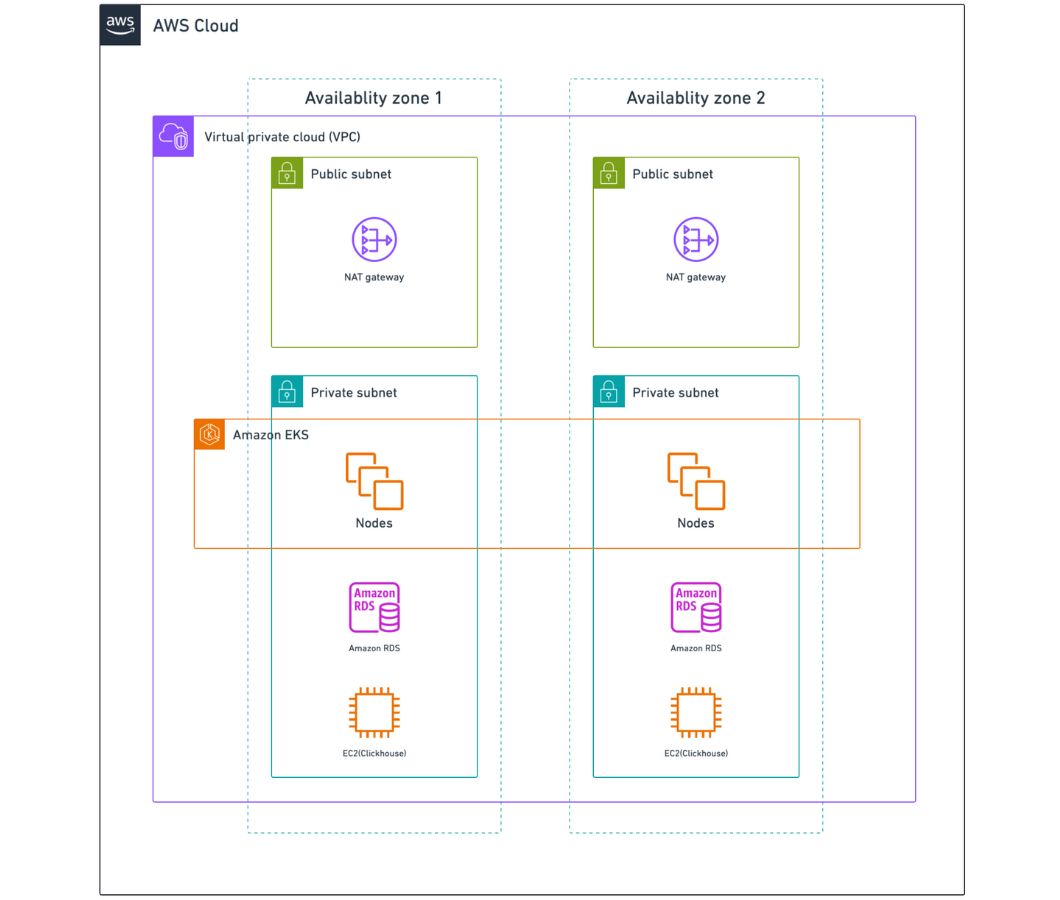

Infrastructure provisioning

We provision the infrastructure of our application, in particular the EKS (Elastic Kubernetes Service) cluster, with Terraform. Terraform is an infrastructure-as-code tool that lets us manage our infrastructure resources declaratively.

Using Terraform configuration files, we specified the desired state of our infrastructure: the EKS cluster, the related networking components, security groups, IAM roles, and every other essential component. Applying this configuration created the EKS cluster and all the defined resources within our AWS environment.
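As an illustration of what "desired state" means here, the following Python sketch emits a small configuration in Terraform's JSON syntax that declares one EKS cluster. It is not our actual configuration: the resource label `main`, the two variable names, and the cluster name are hypothetical, and a real setup also needs the VPC, IAM, and node group resources.

```python
import json


def eks_cluster_config(cluster_name: str) -> dict:
    """Build a Terraform JSON document that declares one EKS cluster."""
    return {
        # Hypothetical input variables, supplied by the rest of the configuration.
        "variable": {
            "cluster_role_arn": {"type": "string"},
            "private_subnet_ids": {"type": "list(string)"},
        },
        "resource": {
            "aws_eks_cluster": {
                "main": {
                    "name": cluster_name,
                    "role_arn": "${var.cluster_role_arn}",
                    # The cluster is attached to the private subnets.
                    "vpc_config": {"subnet_ids": "${var.private_subnet_ids}"},
                }
            }
        },
    }


if __name__ == "__main__":
    # Terraform reads JSON configuration from files ending in .tf.json.
    print(json.dumps(eks_cluster_config("demo-cluster"), indent=2))
```

The file only describes what should exist; Terraform works out which resources to create or change to reach that state.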

Continuous integration and continuous delivery pipeline

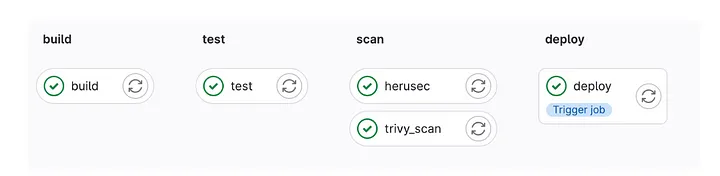

Our Continuous Integration/Continuous Deployment (CI/CD) pipeline is composed of four stages:

- Build: During this stage, the Docker image is built and pushed to the GitLab Container Registry, which provides a secure and integrated solution for managing Docker images within the GitLab platform. The build job is triggered only on the dev and main branches.

- Test: During this stage, unit tests and end-to-end (e2e) tests are executed. Note that the test job is triggered only on feature branches.

- Scan: In this stage, we run Static Application Security Testing (SAST) with Horusec and image analysis with Trivy. The stage is split into two jobs, the horusec job and the trivy-scan job, each handling one type of scan. When a job completes, its results are uploaded directly to DefectDojo. The scan jobs are triggered only on the dev and main branches.

- Deploy: In this stage, a downstream pipeline is triggered. The downstream pipeline lives in the Helm chart repository associated with each microservice, which keeps the code separate from its deployment files. After receiving the Docker image created by the main pipeline, the downstream pipeline deploys the Helm chart to the Kubernetes cluster.
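The branch rules above can be summarized in a few lines of Python. This is only a model of the behavior, not our GitLab configuration. It assumes that every branch other than dev and main counts as a feature branch, and that the deploy stage runs on the same branches as build and scan; the job names `build`, `test`, and `deploy` are also placeholders.

```python
LONG_LIVED_BRANCHES = {"dev", "main"}


def jobs_for_branch(branch: str) -> list[str]:
    """Return the jobs that would run for a push to the given branch."""
    if branch in LONG_LIVED_BRANCHES:
        # Build and scan run on dev and main; deploy is assumed to follow them.
        return ["build", "horusec", "trivy-scan", "deploy"]
    # Assumption: every other branch is treated as a feature branch.
    return ["test"]


if __name__ == "__main__":
    for name in ("main", "dev", "feature/login"):
        print(name, "->", jobs_for_branch(name))
```

Keeping the tests on feature branches and the heavier build and scan jobs on dev and main means images are only built and scanned for code that has already been merged.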

Security measures integration

1. Security orchestration and vulnerabilities display

To visualize and manage the vulnerabilities properly, we used DefectDojo. We deployed it on an Amazon Web Services (AWS) EC2 instance, where it collects the findings from all the scan jobs in one place.

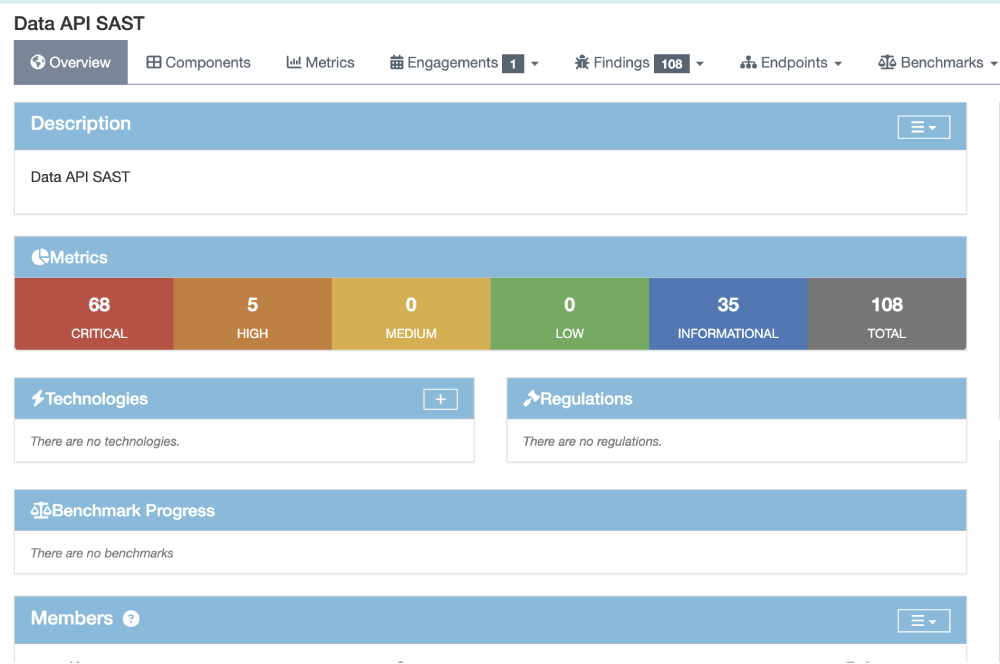

2. Static application security testing (SAST)

We first used SonarQube Community Edition. It offers some basic security features, but its focus lies primarily in code quality and general static analysis. Because of these limitations, we didn’t get any pertinent results: the tool did not detect any vulnerabilities in any of the microservices, and all the tests were tagged as passed.

We then switched to Horusec. The Horusec job is part of the Scan stage and is triggered only on the main and dev branches. The job starts by running the Horusec command, which launches the analysis. We specify the output file (sast-report.json) that receives the scan result and choose JSON as the output format, which makes integration with DefectDojo straightforward. We also make sure that severity information is included in the final result. Next, we use DefectDojo’s REST API to upload the JSON file (sast-report.json) created in the previous step. The request includes the product name, the scan type (Horusec Scan), and the DefectDojo token for authentication.
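The upload step can be sketched in Python with only the standard library. This is an illustration rather than the script from our pipeline: the base URL, token, product name, and engagement name are placeholders, and the `engagement_name` field is an assumption (DefectDojo needs to know which engagement a report belongs to, which is not detailed in this article).

```python
import urllib.request
import uuid


def build_import_request(base_url: str, token: str, product_name: str,
                         engagement_name: str, scan_type: str,
                         report_name: str, report_bytes: bytes) -> urllib.request.Request:
    """Prepare a multipart POST for DefectDojo's import-scan endpoint."""
    boundary = uuid.uuid4().hex
    fields = {
        "scan_type": scan_type,
        "product_name": product_name,
        "engagement_name": engagement_name,  # assumption, see the lead-in
    }
    parts = []
    for name, value in fields.items():
        parts.append(
            f'--{boundary}\r\nContent-Disposition: form-data; name="{name}"'
            f'\r\n\r\n{value}\r\n'.encode()
        )
    # The report itself travels as the "file" part of the form.
    parts.append(
        f'--{boundary}\r\nContent-Disposition: form-data; name="file"; '
        f'filename="{report_name}"\r\nContent-Type: application/json\r\n\r\n'.encode()
        + report_bytes + b"\r\n"
    )
    parts.append(f"--{boundary}--\r\n".encode())
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/api/v2/import-scan/",
        data=b"".join(parts),
        method="POST",
        headers={
            "Authorization": f"Token {token}",
            "Content-Type": f"multipart/form-data; boundary={boundary}",
        },
    )


if __name__ == "__main__":
    # Placeholder values; nothing is sent until urlopen() is called.
    request = build_import_request(
        "https://defectdojo.example.com", "<api-token>", "my-product",
        "ci-scans", "Horusec Scan", "sast-report.json", b"{}",
    )
    print(request.get_method(), request.full_url)
```

Passing the prepared request to `urllib.request.urlopen(request)` would perform the upload. The same function covers the Trivy report by changing the scan type to `Trivy Scan` and the file to trivy-report.json.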

Using Horusec, we’ve obtained relevant findings regarding the security of our applications.

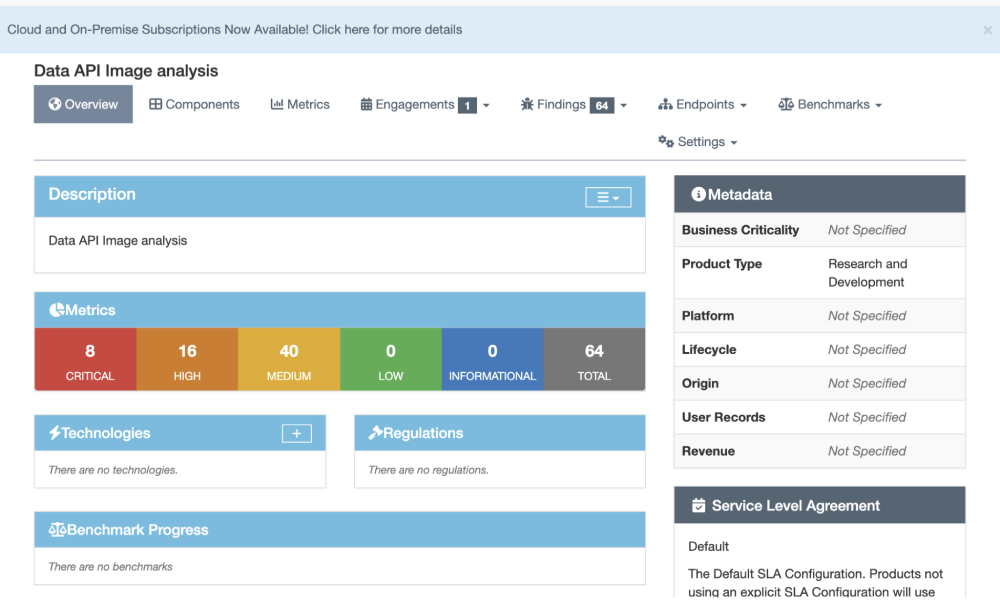

3. Image analysis with Trivy

The Trivy job is also part of the Scan stage and is triggered only on the main and dev branches. It starts by pulling the Docker image produced by the build stage, then runs the Trivy command to analyze it. The scan result is saved to the output file (trivy-report.json) in JSON format for easy integration with DefectDojo. Next, we use DefectDojo’s REST API to upload trivy-report.json, providing the product name, the scan type (Trivy Scan), and the DefectDojo token for authentication.
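Before uploading, it can be handy to get a quick overview of what Trivy found. The sketch below counts the findings per severity in a Trivy JSON report. It is not part of our pipeline, and the sample report is a hypothetical, heavily trimmed example with placeholder vulnerability IDs.

```python
import json
from collections import Counter


def count_by_severity(report: dict) -> Counter:
    """Count vulnerabilities per severity across all scanned targets."""
    counts = Counter()
    for result in report.get("Results", []):
        # "Vulnerabilities" is absent or null for targets with no findings.
        for vuln in result.get("Vulnerabilities") or []:
            counts[vuln.get("Severity", "UNKNOWN")] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical report; real reports carry many more fields per finding.
    sample = json.loads("""
    {
      "Results": [
        {"Target": "app (alpine)", "Vulnerabilities": [
          {"VulnerabilityID": "CVE-0000-0001", "Severity": "HIGH"},
          {"VulnerabilityID": "CVE-0000-0002", "Severity": "LOW"}
        ]},
        {"Target": "package-lock.json"}
      ]
    }
    """)
    print(dict(count_by_severity(sample)))
```

In a CI job, the report would be loaded from trivy-report.json with `json.load` instead of the inline sample.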

Using Trivy, we’ve obtained relevant findings about the security of our built images.

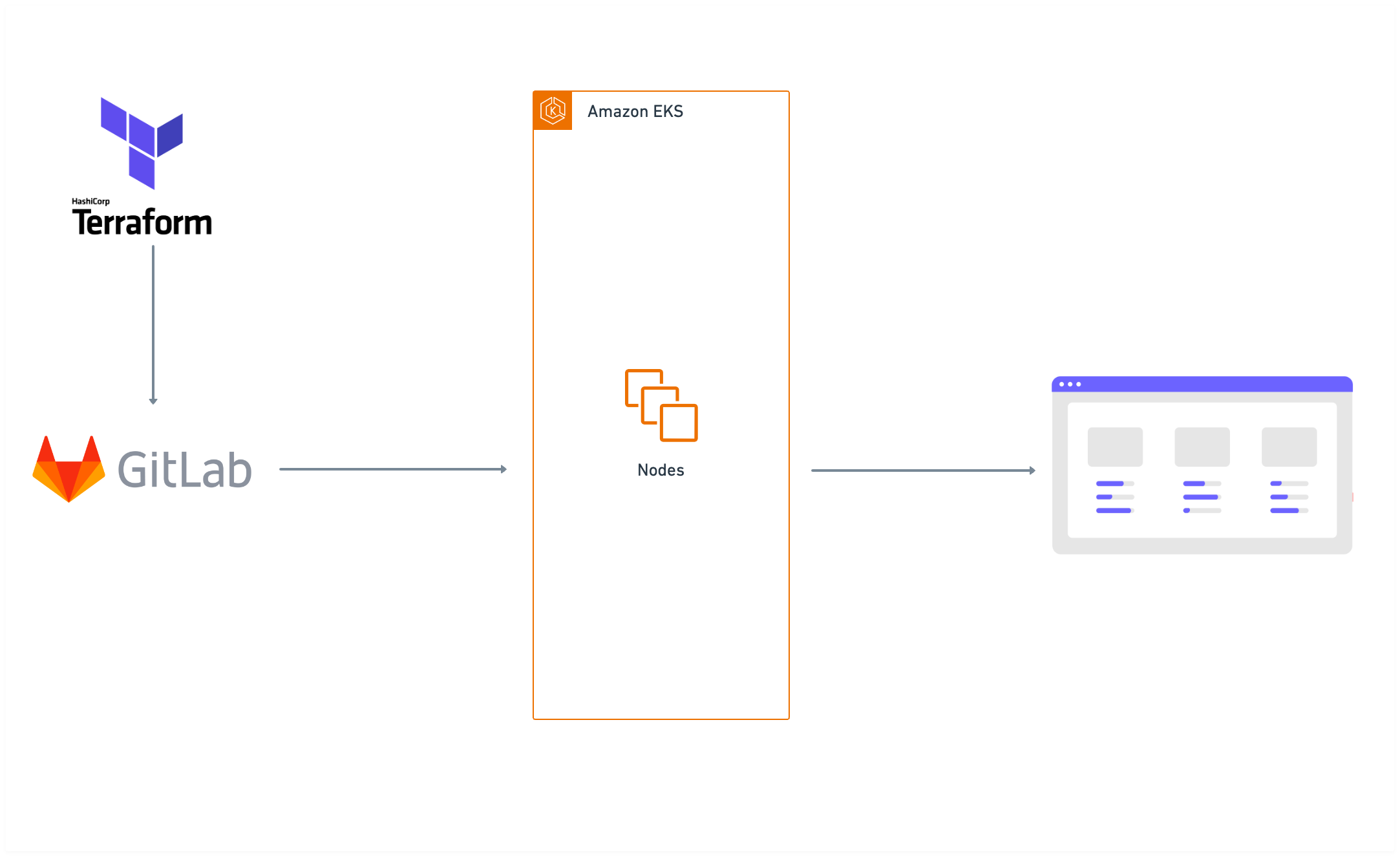

Microservices deployment

We used Helm charts to deploy each microservice of our application. A Helm chart is a package that contains all the resources needed to deploy an application to a Kubernetes cluster. This includes the YAML configuration files for deployments, services, secrets, and config maps that define the desired state of the application.
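As a rough idea of what the deployment job runs, this Python sketch assembles a `helm upgrade --install` command for one microservice. The release name, chart path, namespace, and the `image.tag` values key are assumptions for illustration; our charts may expose the image tag under a different key.

```python
import shlex


def helm_upgrade_command(release: str, chart_dir: str,
                         namespace: str, image_tag: str) -> list[str]:
    """Build the Helm command that installs or upgrades one release."""
    return [
        "helm", "upgrade", "--install", release, chart_dir,
        "--namespace", namespace,
        # Assumption: the chart reads the image tag from the image.tag value.
        "--set", f"image.tag={image_tag}",
        # Wait until the deployed resources report ready.
        "--wait",
    ]


if __name__ == "__main__":
    # Hypothetical names; the command is printed, not executed.
    command = helm_upgrade_command("widget-api", "./chart", "dev", "1a2b3c4d")
    print(shlex.join(command))
```

Using `upgrade --install` lets the same command handle both the first deployment and every later update of a release.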

The Helm chart for each microservice is kept in its own repository. A deployment is triggered once the Docker images are built and the security scans have completed. As we can see in the following figure, we were able to deploy all the microservices to the EKS cluster provisioned in a previous step.

Once all the Helm charts are deployed, all the components are up and running.